WHY COMPILED

Faster

The slowest part of your algorithm will often dominate its overall run time. We compile your algorithm directly to machine code, so it won’t be bogged down by a slow interpreter.

Our math libraries also make heavy use of lazy evaluation, memoization, and copy-on-write semantics to further improve performance and reduce memory footprint.
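As a rough illustration of two of these techniques, the Python sketch below combines lazy evaluation (a generator that computes values only when asked) with memoization (a cache that avoids repeating work). It is not our library's implementation; `slow_square`, `lazy_squares`, and `call_count` are hypothetical names chosen for this example.

```python
from functools import lru_cache
from itertools import islice

call_count = 0  # counts how often the "expensive" function really runs


@lru_cache(maxsize=None)
def slow_square(n):
    """Hypothetical stand-in for an expensive computation (memoized)."""
    global call_count
    call_count += 1
    return n * n


def lazy_squares():
    """Lazily yield squares; nothing is computed until a value is requested."""
    n = 0
    while True:
        yield slow_square(n)
        n += 1


# Only the five requested values are ever computed.
first_five = list(islice(lazy_squares(), 5))

# A second pass is served from the cache, so call_count does not grow.
again = list(islice(lazy_squares(), 5))
```

After both passes, `slow_square` has run only once per distinct input, which is the kind of saving memoization is meant to provide.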

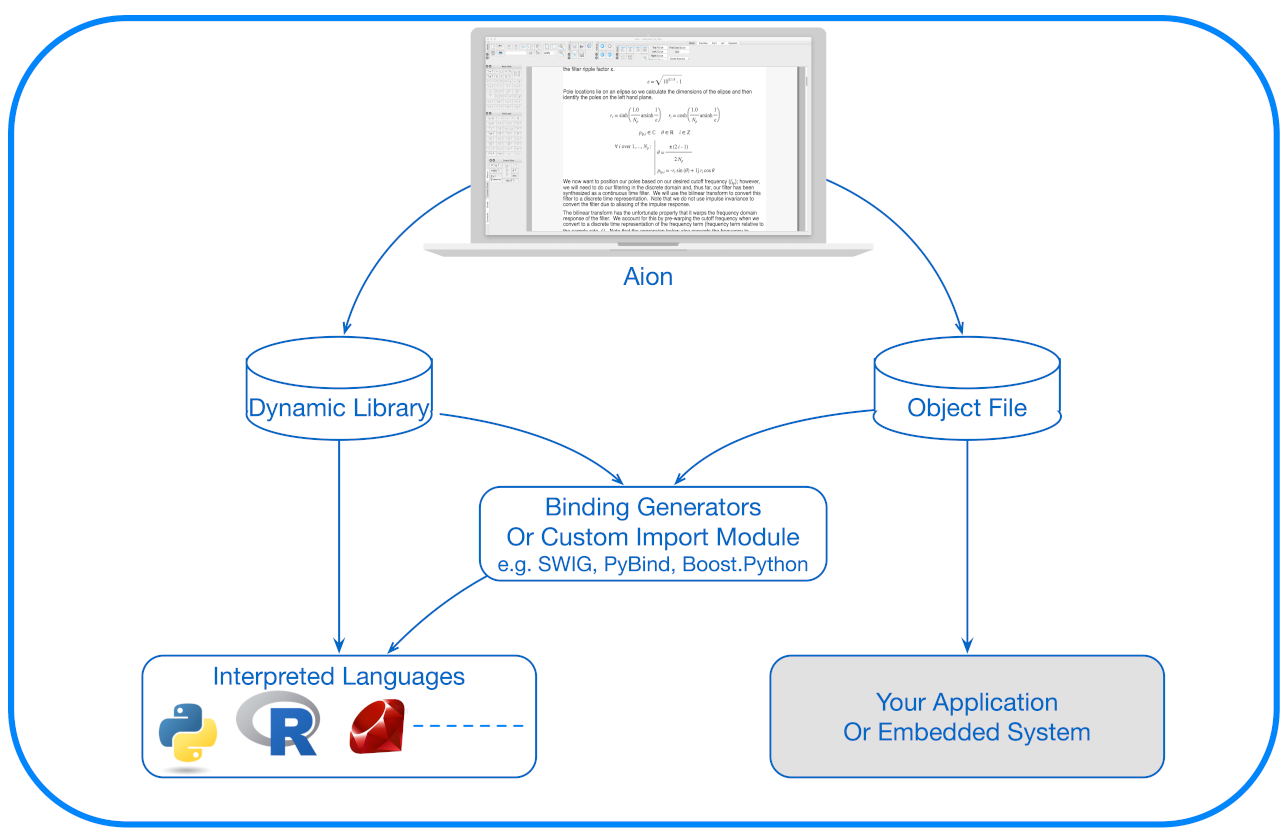

Better Integration

You can export your algorithm as a dynamic library or object file that you can integrate directly into other projects.

- No need to rewrite your algorithm in a compiled language like FORTRAN, C, or C++.

- No interpreter needs to be bundled with your code.

- Easily integrate with interpreted languages using SWIG, your own code, or our upcoming generic language bindings.

- Our well-documented C++ API is designed to be simple to use.

Benchmarks

| Type Of Algorithm | Improvement |
| --- | --- |
| Matrix Operations | 20% Faster |
| Typical: Uses mix of math functions, basic operations, and large data structures | 4 × Faster |
| Basic Math Heavy: No use of math library, only simple data types | 40 × Faster |

Benchmarks were run against CPython 3.8 and NumPy 1.19.2 on a 3 GHz 8-core Intel Xeon E5. The algorithms used, in the same order as the table rows, were:

- Repeated multiplication of two 1000×1000 matrices

- Sieve Of Eratosthenes algorithm

- Identification of random large primes by integer factorization
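For readers unfamiliar with the second algorithm, here is a minimal pure-Python sketch of the Sieve of Eratosthenes. It is an illustration only and is not the exact code used in the benchmark; the function name `sieve_of_eratosthenes` and its `limit` parameter are assumptions made for this example.

```python
def sieve_of_eratosthenes(limit):
    """Return a list of all primes less than or equal to limit."""
    if limit < 2:
        return []

    # is_prime[n] stays True until n is found to be a multiple of a smaller prime.
    is_prime = [True] * (limit + 1)
    is_prime[0] = is_prime[1] = False

    p = 2
    while p * p <= limit:
        if is_prime[p]:
            # Start at p*p: smaller multiples were already crossed out
            # by smaller primes.
            for multiple in range(p * p, limit + 1, p):
                is_prime[multiple] = False
        p += 1

    return [n for n, flag in enumerate(is_prime) if flag]


primes_up_to_30 = sieve_of_eratosthenes(30)
```

Because it mixes a large list with simple loops and arithmetic, this kind of workload is a reasonable stand-in for the "Typical" row in the table.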